Depending on your needs, polymorphism can benefit different aspects of a project. For example, a small class hierarchy can make your code cleaner and more expressive than a single class with nullable fields or a type label. And it seems only natural to use it with document-oriented databases like MongoDB. But if you want a document with polymorphic fields using MongoDB and Spring Data, you might face an exception similar to this: MappingInstantiationException: Failed to instantiate ...FieldType using constructor NO_CONSTRUCTOR with arguments. Let’s see why this happens and how to fix it properly.

Please keep in mind that all examples below are in Kotlin.
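To make the contrast from the introduction a bit more concrete, here is a minimal sketch comparing a single class with a type label and nullable fields against a small sealed hierarchy. The names LabeledPayment, Payment and describe are made up for illustration and are not part of the example project.

```kotlin
// Variant 1: one class, a type label and nullable fields.
// Nothing stops callers from building an inconsistent instance.
data class LabeledPayment(
    val type: String,          // "card" or "bank"
    val cardNumber: String?,   // only meaningful when type == "card"
    val iban: String?,         // only meaningful when type == "bank"
)

// Variant 2: a sealed hierarchy, each case carries exactly its own fields.
sealed interface Payment {
    data class Card(val cardNumber: String) : Payment
    data class Bank(val iban: String) : Payment
}

// The compiler checks that `when` covers every implementation.
fun describe(payment: Payment): String = when (payment) {
    is Payment.Card -> "card ${payment.cardNumber}"
    is Payment.Bank -> "bank ${payment.iban}"
}

fun main() {
    // Compiles, but makes no sense: a "card" without a card number.
    val broken = LabeledPayment(type = "card", cardNumber = null, iban = "DE00")
    println(broken)

    println(describe(Payment.Card("4111")))
    println(describe(Payment.Bank("DE00")))
}
```

With the sealed variant, adding a third implementation makes the when expression fail to compile until the new case is handled, which is the kind of expressiveness the introduction refers to.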

The issue

Let’s imagine we have an app that uses Spring Data and MongoDB. Within our app we have a simple document – DocumentWithInterfaceField:

    import org.bson.types.ObjectId
    import org.springframework.data.annotation.Id
    import org.springframework.data.annotation.TypeAlias
    import org.springframework.data.mongodb.core.mapping.Document
    import org.springframework.data.mongodb.core.mapping.Field

    @Document("document")
    @TypeAlias("document")
    data class DocumentWithInterfaceField(
        @Field("interface_field")
        val interfaceField: FieldType,
        @Id
        val id: ObjectId = ObjectId.get(),
    )

    sealed interface FieldType {
        @get:Field("some_int_field")
        val someIntField: Int

        @TypeAlias("field_type_impl")
        data class FieldTypeImpl(
            override val someIntField: Int = 123,
            @Field("some_string_field")
            val someStringField: String = "qwe",
        ) : FieldType

        @TypeAlias("other_field_type_impl")
        data class OtherFieldTypeImpl(
            override val someIntField: Int = 456,
            @Field("other_string_field")
            val otherStringField: String = "asd",
        ) : FieldType
    }

The full example is available on GitHub.

Let’s walk through this class. The document itself only has 2 fields: interfaceField and id, where id is a BSON object id and interfaceField is of type FieldType.

FieldType is a sealed interface having 2 implementations: FieldTypeImpl and OtherFieldTypeImpl. Both implementations share someIntField and have 1 string field. In case of FieldTypeImpl the field is called someStringField, and in case of OtherFieldTypeImpl the field is called otherStringField.

So the 2 implementations are different and one cannot be deserialized from the other.

Both implementations are also annotated with @TypeAlias.

Looks good! Now let’s declare a repository for the document.

    interface DocumentRepository : MongoRepository<DocumentWithInterfaceField, ObjectId>

The default CRUD repository will work just fine for our use case.

And we will also need to configure our app to work with MongoDB.

MongoDbConfig

    @Configuration(proxyBeanMethods = false)
    @EnableMongoRepositories(
        basePackageClasses = [DocumentRepository::class]
    )
    @EnableConfigurationProperties(MongoProperties::class)
    class MongoDbConfig : AbstractMongoClientConfiguration() {

        @Autowired
        private lateinit var mongoProperties: MongoProperties

        override fun getDatabaseName() = mongoProperties.dbName

        override fun mongoClient(): MongoClient {
            val connectionString = ConnectionString(mongoProperties.uri)
            val mongoClientSettings = MongoClientSettings.builder()
                .applyConnectionString(connectionString)
                .build()
            return MongoClients.create(mongoClientSettings)
        }
    }

    @ConstructorBinding
    @ConfigurationProperties("mongo")
    private data class MongoProperties(
        val dbName: String = "spring_data_mongo_issue",
        val uri: String,
    )

Here we configure the Mongo client according to the Spring docs. If you’re interested in reading more about Mongo configuration, make sure to also check this article.

Let’s quickly check what happens in this configuration:

- @EnableMongoRepositories: enables Mongo repositories (so that Spring will create repository beans for us).
- @EnableConfigurationProperties: enables configuration properties (so that we can configure the DB name and connection URL).
- getDatabaseName(): configures the database name using the properties.
- mongoClient(): configures the Mongo client to use the connection string from the properties.

Nothing fancy, you can check the full example on GitHub.

Now that we have everything set up, let’s write a test for the repository.

Repository test

We want to have an integration test that will use a real MongoDB instance so that we can make sure our repository works fine in real life (aka prod). The easiest way to have a real DB instance is to use a MongoDB container with testcontainers. I will skip the setup details here, but you can check that on GitHub.

    class SaveAndGetWithRepositoryTest @Autowired constructor(
        private val repository: DocumentRepository,
    ) : AbstractRepositoryTest() {

        @TestFactory
        fun `should save the document and then get it`() = sequenceOf(
            DocumentWithInterfaceField(FieldTypeImpl()),
            DocumentWithInterfaceField(OtherFieldTypeImpl()),
        ).map { document ->
            dynamicTest("should save $document and then get it") {
                // given
                repository.save(document)

                // when & then
                expectCatching {
                    repository.findById(document.id)
                }.isSuccess().isPresent() isEqualTo document
            }
        }.asStream()
    }

This test is a dynamic test. Don’t worry if you’re not familiar with dynamic tests; basically, what happens here is:

- We create 2 instances of DocumentWithInterfaceField, one with a FieldTypeImpl instance and one with an OtherFieldTypeImpl instance.
- For each of those 2 instances we create a dynamic test.
- Inside the test we first save the document using the repository.
- Then we try to get it by id from the DB.
- If we got it successfully, we check that the document we got from the DB is equal to the original document.

You can check the full example here.

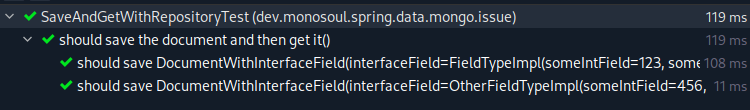

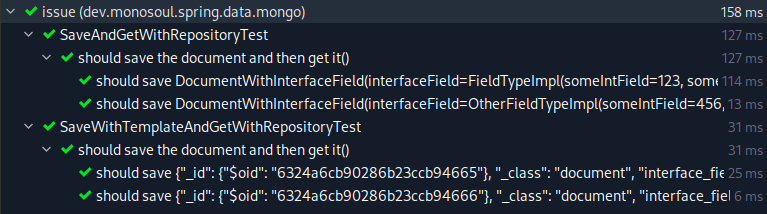

Let’s try to run this test.

Okay, looks good to me! Let’s deploy it!

A wild MappingInstantiationException appears

Imagine we have deployed the app to the development environment and are now checking that everything works there.

We have tried to create a new document and save it – that worked.

We have tried to get the document from the DB – that also worked.

Cool, seems like we did a good job here! Until at some point we suddenly start to see this: org.springframework.data.mapping.model.MappingInstantiationException: Failed to instantiate dev.monosoul.spring.data.mongo.issue.FieldType using constructor NO_CONSTRUCTOR with arguments.

The stack trace looks somewhat similar to this:

MappingInstantiationException stack trace

org.springframework.data.mapping.model.MappingInstantiationException: Failed to instantiate dev.monosoul.spring.data.mongo.issue.FieldType using constructor NO_CONSTRUCTOR with arguments

at org.springframework.data.mapping.model.ReflectionEntityInstantiator.instantiateClass(ReflectionEntityInstantiator.java:116)

at org.springframework.data.mapping.model.ReflectionEntityInstantiator.createInstance(ReflectionEntityInstantiator.java:53)

at org.springframework.data.mapping.model.ClassGeneratingEntityInstantiator.createInstance(ClassGeneratingEntityInstantiator.java:102)

at org.springframework.data.mongodb.core.convert.MappingMongoConverter.read(MappingMongoConverter.java:560)

at org.springframework.data.mongodb.core.convert.MappingMongoConverter.readDocument(MappingMongoConverter.java:526)

at org.springframework.data.mongodb.core.convert.MappingMongoConverter$ConversionContext.convert(MappingMongoConverter.java:2290)

at org.springframework.data.mongodb.core.convert.MappingMongoConverter$MongoDbPropertyValueProvider.getPropertyValue(MappingMongoConverter.java:1979)

at org.springframework.data.mongodb.core.convert.MappingMongoConverter$AssociationAwareMongoDbPropertyValueProvider.getPropertyValue(MappingMongoConverter.java:2038)

at org.springframework.data.mongodb.core.convert.MappingMongoConverter$AssociationAwareMongoDbPropertyValueProvider.getPropertyValue(MappingMongoConverter.java:1996)

at org.springframework.data.mapping.model.PersistentEntityParameterValueProvider.getParameterValue(PersistentEntityParameterValueProvider.java:75)

at org.springframework.data.mapping.model.SpELExpressionParameterValueProvider.getParameterValue(SpELExpressionParameterValueProvider.java:53)

at org.springframework.data.mapping.model.KotlinClassGeneratingEntityInstantiator$DefaultingKotlinClassInstantiatorAdapter.extractInvocationArguments(KotlinClassGeneratingEntityInstantiator.java:236)

at org.springframework.data.mapping.model.KotlinClassGeneratingEntityInstantiator$DefaultingKotlinClassInstantiatorAdapter.createInstance(KotlinClassGeneratingEntityInstantiator.java:210)

at org.springframework.data.mapping.model.ClassGeneratingEntityInstantiator.createInstance(ClassGeneratingEntityInstantiator.java:102)

at org.springframework.data.mongodb.core.convert.MappingMongoConverter.read(MappingMongoConverter.java:560)

at org.springframework.data.mongodb.core.convert.MappingMongoConverter.readDocument(MappingMongoConverter.java:526)

at org.springframework.data.mongodb.core.convert.MappingMongoConverter.read(MappingMongoConverter.java:462)

at org.springframework.data.mongodb.core.convert.MappingMongoConverter.read(MappingMongoConverter.java:458)

at org.springframework.data.mongodb.core.convert.MappingMongoConverter.read(MappingMongoConverter.java:120)

at org.springframework.data.mongodb.core.MongoTemplate$ReadDocumentCallback.doWith(MongoTemplate.java:3322)

at org.springframework.data.mongodb.core.MongoTemplate.executeFindOneInternal(MongoTemplate.java:2937)

at org.springframework.data.mongodb.core.MongoTemplate.doFindOne(MongoTemplate.java:2615)

at org.springframework.data.mongodb.core.MongoTemplate.doFindOne(MongoTemplate.java:2585)

at org.springframework.data.mongodb.core.MongoTemplate.findById(MongoTemplate.java:922)

at org.springframework.data.mongodb.repository.support.SimpleMongoRepository.findById(SimpleMongoRepository.java:132)

at java.base/jdk.internal.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at java.base/jdk.internal.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:77)

at java.base/jdk.internal.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.base/java.lang.reflect.Method.invoke(Method.java:568)

at org.springframework.data.repository.core.support.RepositoryMethodInvoker$RepositoryFragmentMethodInvoker.lambda$new$0(RepositoryMethodInvoker.java:289)

at org.springframework.data.repository.core.support.RepositoryMethodInvoker.doInvoke(RepositoryMethodInvoker.java:137)

at org.springframework.data.repository.core.support.RepositoryMethodInvoker.invoke(RepositoryMethodInvoker.java:121)

at org.springframework.data.repository.core.support.RepositoryComposition$RepositoryFragments.invoke(RepositoryComposition.java:530)

at org.springframework.data.repository.core.support.RepositoryComposition.invoke(RepositoryComposition.java:286)

at org.springframework.data.repository.core.support.RepositoryFactorySupport$ImplementationMethodExecutionInterceptor.invoke(RepositoryFactorySupport.java:640)

at org.springframework.aop.framework.ReflectiveMethodInvocation.proceed(ReflectiveMethodInvocation.java:186)

at org.springframework.data.repository.core.support.QueryExecutorMethodInterceptor.doInvoke(QueryExecutorMethodInterceptor.java:164)

at org.springframework.data.repository.core.support.QueryExecutorMethodInterceptor.invoke(QueryExecutorMethodInterceptor.java:139)

at org.springframework.aop.framework.ReflectiveMethodInvocation.proceed(ReflectiveMethodInvocation.java:186)

at org.springframework.data.projection.DefaultMethodInvokingMethodInterceptor.invoke(DefaultMethodInvokingMethodInterceptor.java:81)

at org.springframework.aop.framework.ReflectiveMethodInvocation.proceed(ReflectiveMethodInvocation.java:186)

at org.springframework.aop.interceptor.ExposeInvocationInterceptor.invoke(ExposeInvocationInterceptor.java:97)

at org.springframework.aop.framework.ReflectiveMethodInvocation.proceed(ReflectiveMethodInvocation.java:186)

at org.springframework.data.repository.core.support.MethodInvocationValidator.invoke(MethodInvocationValidator.java:99)

at org.springframework.aop.framework.ReflectiveMethodInvocation.proceed(ReflectiveMethodInvocation.java:186)

at org.springframework.aop.framework.JdkDynamicAopProxy.invoke(JdkDynamicAopProxy.java:215)

at jdk.proxy2/jdk.proxy2.$Proxy77.findById(Unknown Source)

...

Caused by: org.springframework.beans.BeanInstantiationException: Failed to instantiate [dev.monosoul.spring.data.mongo.issue.FieldType]: Specified class is an interface

at org.springframework.beans.BeanUtils.instantiateClass(BeanUtils.java:143)

at org.springframework.data.mapping.model.ReflectionEntityInstantiator.instantiateClass(ReflectionEntityInstantiator.java:112)

... 122 more

The root exception there is: org.springframework.beans.BeanInstantiationException: Failed to instantiate [dev.monosoul.spring.data.mongo.issue.FieldType]: Specified class is an interface.

That is quite puzzling, right? Why did it work locally in the test and keep working for some time after deployment, but then suddenly start failing?

Well, I’m going to save you the troubleshooting time I wasted myself.

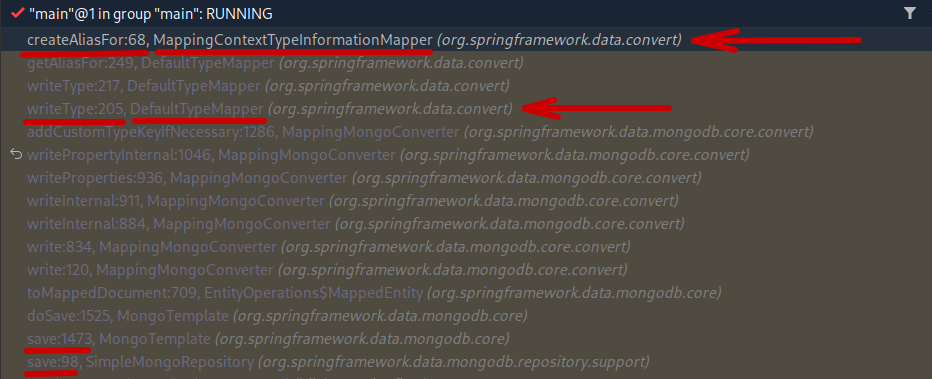

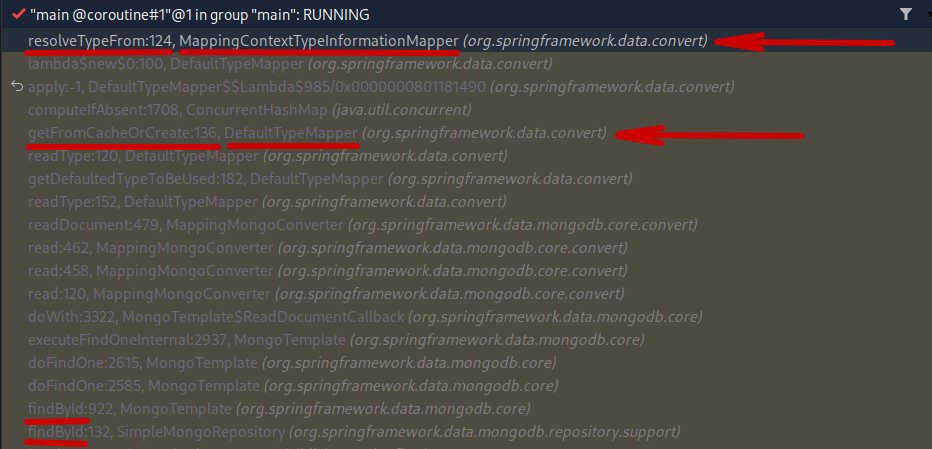

DefaultTypeMapper is to blame

Kind of. There are actually multiple classes involved. DefaultTypeMapper is the class responsible for type mapping when saving data to and reading data from the DB. If you put breakpoints at lines 198 (method writeType) and 128 (method getFromCacheOrCreate) in this class and run the test again, you’ll notice that writeType is called when saving the entry. This method delegates to MappingContextTypeInformationMapper, which computes the mapping and stores it internally.

Once you’re past the first breakpoint and get to the second one (when getting the document from the repository), you’ll notice that getFromCacheOrCreate is called when getting the entry. If DefaultTypeMapper’s cache is empty, this method delegates again to MappingContextTypeInformationMapper to resolve the alias, which in turn resolves the type from its internal map.

Revelation

A-ha! So this is what happens! When we save the document first, its alias-to-type mapping gets cached, and when we try to get it after that, it deserializes fine. But if we try to get the document before saving it, we’ll get the exception! The mapper can’t deduce the type based on just the alias; it doesn’t know about the type, so it tries to use the type of the field in the document! And in our case the field type is dev.monosoul.spring.data.mongo.issue.FieldType, which is an interface!

So the app only worked until the next deployment happened! Once we deploy, the new instance of the app doesn’t have the mapping in its cache to resolve the type (until we save the document again).
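The behaviour described above can be imitated with a toy model. This is not Spring Data’s actual code: LazyAliasRegistry, write, read and the stand-in classes are hypothetical names, and the real lookup goes through DefaultTypeMapper and MappingContextTypeInformationMapper. The sketch only shows why a read succeeds after a write in the same process and falls back to the declared field type in a fresh one.

```kotlin
// Stand-ins for the model classes from the article.
interface FieldType
class FieldTypeImpl : FieldType

// Toy registry: it learns an alias only when an object of that type is written.
class LazyAliasRegistry {
    private val aliasToType = mutableMapOf<String, Class<*>>()

    // Writing registers the alias as a side effect and returns the stored alias.
    fun write(value: Any, alias: String): String {
        aliasToType[alias] = value.javaClass
        return alias
    }

    // Reading resolves the alias, or falls back to the declared field type.
    fun read(alias: String, declaredType: Class<*>): Class<*> =
        aliasToType[alias] ?: declaredType
}

fun main() {
    // Same process: save first, then read. The alias is known.
    val warm = LazyAliasRegistry()
    warm.write(FieldTypeImpl(), "field_type_impl")
    println(warm.read("field_type_impl", FieldType::class.java).simpleName)

    // Fresh process (e.g. after a redeploy): read without a prior save.
    val cold = LazyAliasRegistry()
    val resolved = cold.read("field_type_impl", FieldType::class.java)
    println("${resolved.simpleName}, interface = ${resolved.isInterface}")
}
```

In the second case the toy registry resolves to the interface itself, which is the situation where instantiation has nothing concrete to construct.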

The root exception we got before makes perfect sense now. But how can we reproduce it locally? We need to cover it with a test before we start fixing it to prevent regressions in the future, right?

Luckily, we can do that.

Writing a better test

To properly test the scenario of getting the document before it got saved at least once we can use MongoTemplate. We will create an instance of DBObject with BasicDBObjectBuilder and save it using MongoTemplate thus avoiding any type mapping and caching magic of Spring Data.

    @DirtiesContext(classMode = BEFORE_CLASS) // required to prevent spring from caching the type in the other test
    class SaveWithTemplateAndGetWithRepositoryTest @Autowired constructor(
        private val repository: DocumentRepository,
        private val mongoTemplate: MongoTemplate,
    ) : AbstractRepositoryTest() {

        @TestFactory
        fun `should save the document and then get it`() = sequenceOf(
            ObjectId.get().let { id ->
                id to buildDbObject {
                    this["_id"] = id
                    this["_class"] = "document"
                    this["interface_field"] = buildDbObject {
                        this["_class"] = "field_type_impl"
                        this["some_int_field"] = 123
                        this["some_string_field"] = "qwe"
                    }
                }
            },
            ...
        ).map { (id, document) ->
            dynamicTest("should save $document and then get it") {
                // given
                mongoTemplate.save(document, "document")

                // when & then
                expectCatching {
                    repository.findById(id) // will throw MappingInstantiationException
                }.isSuccess().isPresent().get { this.id } isEqualTo id
            }
        }.asStream()

        private fun buildDbObject(block: BasicDBObjectBuilder.() -> Unit) = BasicDBObjectBuilder.start().apply(block).get()

        private operator fun BasicDBObjectBuilder.set(field: String, value: Any) = add(field, value)
    }

There are a few important things here:

- We mark the test with @DirtiesContext. This is crucial to make sure the test is always reproducible; otherwise other tests might save the document and create an entry in MappingContextTypeInformationMapper, making this test always pass regardless of how we save the document here.
- In addition to the repository bean we also inject MongoTemplate.
- We create a pair (or a tuple) of an object id and an instance of DBObject. The objects we create here have exactly the same values as the objects we create for testing in SaveAndGetWithRepositoryTest.
- We save the document into the collection using MongoTemplate.
- buildDbObject and the set operator form a simple DSL to ease DBObject creation.
- The rest is similar to SaveAndGetWithRepositoryTest.

You can check the full class on GitHub.

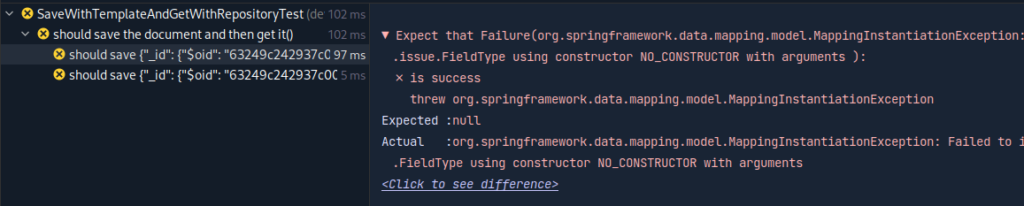

Let’s try to run the test.

Perfect! It has failed with the exact exception we expected.

Wait, but are you sure?

I know what you might be thinking. Are we sure of the issue? Maybe the documents that actually get saved to the DB are different?

To make it a bit more convincing for you, AbstractRepositoryTest has a tearDown method where it prints all documents from the collection after every test.

Here’s what we get after running both SaveAndGetWithRepositoryTest and SaveWithTemplateAndGetWithRepositoryTest:

Documents in the DB

    d.m.s.d.m.i.SaveAndGetWithRepositoryTest - Document in DB: {"_id": {"$oid": "63249ce7adb06e3c0466cc1e"}, "interface_field": {"some_int_field": 123, "some_string_field": "qwe", "_class": "field_type_impl"}, "_class": "document"}
    d.m.s.d.m.i.SaveAndGetWithRepositoryTest - Document in DB: {"_id": {"$oid": "63249ce7adb06e3c0466cc1f"}, "interface_field": {"some_int_field": 456, "other_string_field": "asd", "_class": "other_field_type_impl"}, "_class": "document"}
    d.m.s.d.m.i.SaveWithTemplateAndGetWithRepositoryTest - Document in DB: {"_id": {"$oid": "63249ce7adb06e3c0466cc21"}, "_class": "document", "interface_field": {"_class": "field_type_impl", "some_int_field": 123, "some_string_field": "qwe"}}
    d.m.s.d.m.i.SaveWithTemplateAndGetWithRepositoryTest - Document in DB: {"_id": {"$oid": "63249ce7adb06e3c0466cc22"}, "_class": "document", "interface_field": {"_class": "other_field_type_impl", "some_int_field": 456, "other_string_field": "asd"}}

As you can see, even though the field order is different, the values in the documents are the same.
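The same point can be checked in plain Kotlin, since map equality does not depend on key order. The sketch below uses ordinary maps as stand-ins for the stored documents (the _id is left out because it differs per document); it is an illustration only and not part of the example project.

```kotlin
// Field order as written through the repository.
val savedWithRepository: Map<String, Any> = linkedMapOf(
    "interface_field" to linkedMapOf(
        "some_int_field" to 123,
        "some_string_field" to "qwe",
        "_class" to "field_type_impl",
    ),
    "_class" to "document",
)

// Field order as written through MongoTemplate in the second test.
val savedWithTemplate: Map<String, Any> = linkedMapOf(
    "_class" to "document",
    "interface_field" to linkedMapOf(
        "_class" to "field_type_impl",
        "some_int_field" to 123,
        "some_string_field" to "qwe",
    ),
)

fun main() {
    // Map equality compares entries, not their order, and applies to nested maps too.
    println(savedWithRepository == savedWithTemplate)
}
```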

So yes, I am sure. 🙂

Now let’s get to…

The solution

If you try searching for the exception on Google, you might stumble across this question on StackOverflow or this issue on GitHub.

Both places propose implementing a converter as the solution. Let’s try that out.

Converter

Basically, with this solution we do the deserialization ourselves, field by field. Here’s what it would look like:

    import org.bson.Document
    import org.springframework.core.convert.converter.Converter
    import org.springframework.data.convert.ReadingConverter

    @ReadingConverter
    class FieldTypeConverter : Converter<Document, FieldType> {
        override fun convert(source: Document): FieldType? = when (source.getString("_class")) {
            "field_type_impl" -> {
                FieldTypeImpl(
                    someIntField = source.getInteger("some_int_field"),
                    someStringField = source.getString("some_string_field"),
                )
            }
            "other_field_type_impl" -> {
                OtherFieldTypeImpl(
                    someIntField = source.getInteger("some_int_field"),
                    otherStringField = source.getString("other_string_field"),
                )
            }
            else -> null
        }
    }

The implementation is pretty straightforward:

- We read the type alias from the Document object.
- When the alias is field_type_impl we instantiate FieldTypeImpl.
- When the alias is other_field_type_impl we instantiate OtherFieldTypeImpl.
- When the alias value is unknown we return null (throwing an exception might be an option here as well).
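As a sketch of the stricter option mentioned in the last point, the same dispatch can throw on an unknown alias instead of returning null. To keep the example self-contained it reads from a plain Map rather than a BSON Document, and the model classes are redeclared without Spring annotations; convertStrict is a hypothetical name, not something from the example project.

```kotlin
sealed interface FieldType {
    val someIntField: Int

    data class FieldTypeImpl(
        override val someIntField: Int,
        val someStringField: String,
    ) : FieldType

    data class OtherFieldTypeImpl(
        override val someIntField: Int,
        val otherStringField: String,
    ) : FieldType
}

// Stand-in for the converter body: a Map plays the role of the BSON Document.
fun convertStrict(source: Map<String, Any?>): FieldType =
    when (val alias = source["_class"] as? String) {
        "field_type_impl" -> FieldType.FieldTypeImpl(
            someIntField = source["some_int_field"] as Int,
            someStringField = source["some_string_field"] as String,
        )
        "other_field_type_impl" -> FieldType.OtherFieldTypeImpl(
            someIntField = source["some_int_field"] as Int,
            otherStringField = source["other_string_field"] as String,
        )
        // Fail loudly instead of silently producing a null field.
        else -> throw IllegalArgumentException("Unknown type alias: $alias")
    }

fun main() {
    val known = mapOf(
        "_class" to "field_type_impl",
        "some_int_field" to 123,
        "some_string_field" to "qwe",
    )
    println(convertStrict(known))

    val unknown = mapOf("_class" to "something_else")
    println(runCatching { convertStrict(unknown) }.exceptionOrNull()?.message)
}
```

Throwing makes a forgotten branch visible at read time, but it does not remove the need to keep the converter in sync with the models by hand.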

We also need to change MongoDbConfig a little bit to use the new converter:

    override fun customConversions() = MongoCustomConversions(
        listOf(
            FieldTypeConverter()
        )
    )

The full example is available on GitHub.

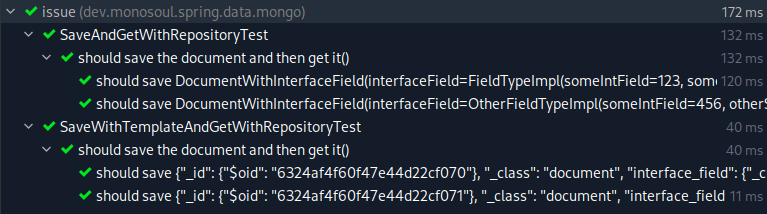

Let’s try to run the tests.

Perfect! All green.

But I don’t really like that solution. It requires you to manually deserialize every polymorphic field. Not only is it an error-prone approach on its own, it also requires anyone who changes the models to remember to update the converter. Quite unreliable. Luckily, that’s not the only solution available! Let’s try other approaches.

Explicit type mapping declaration

Another thing that we can do is to configure DefaultMongoTypeMapper with explicit alias to type mappings. To do that we will take advantage of ConfigurableTypeInformationMapper. We will need to change our MongoDbConfig a bit:

    @Configuration
    ...
    class MongoDbConfig : AbstractMongoClientConfiguration() {
        ...
        @Bean
        fun configurableTypeInformationMapper() = ConfigurableTypeInformationMapper(
            mapOf(
                FieldTypeImpl::class.java to "field_type_impl",
                OtherFieldTypeImpl::class.java to "other_field_type_impl",
            )
        )

        override fun mappingMongoConverter(
            databaseFactory: MongoDatabaseFactory,
            customConversions: MongoCustomConversions,
            mappingContext: MongoMappingContext
        ) = super.mappingMongoConverter(databaseFactory, customConversions, mappingContext).apply {
            setTypeMapper(
                DefaultMongoTypeMapper(
                    DEFAULT_TYPE_KEY,
                    listOf(
                        configurableTypeInformationMapper(),
                        SimpleTypeInformationMapper(),
                    )
                )
            )
        }
    }

A few important things to note here:

- We remove the proxyBeanMethods = false argument from the @Configuration annotation. This is needed for configurableTypeInformationMapper() to return a singleton bean instead of a new object when we call it within the config class.
- We declare a ConfigurableTypeInformationMapper bean with a mapping for each of the 2 FieldType implementations.
- We configure DefaultMongoTypeMapper to use ConfigurableTypeInformationMapper along with SimpleTypeInformationMapper.

The full example is available on GitHub.

This solution is better than the converter one since it doesn’t force us to manually deserialize each polymorphic field. But it is still not perfect, as you might forget to add a mapping here when adding a new polymorphic field. I’d like to have something I can configure once and forget about it.

And I have a solution that will work this way. 🙂

Automated type mapping with reflection

First we will need to add a new dependency to the project. We’re going to use the reflections library. Pick the latest version for the build tool of your choice here.

Now we’re going to create a small extension to DefaultMongoTypeMapper to make it easy to configure and instantiate. Here’s how it would look:

    class ReflectiveMongoTypeMapper(
        private val reflections: Reflections = Reflections("dev.monosoul.spring.data.mongo.issue")
    ) : DefaultMongoTypeMapper(
        DEFAULT_TYPE_KEY,
        listOf(
            ConfigurableTypeInformationMapper(
                reflections.getTypesAnnotatedWith(TypeAlias::class.java).associateWith { clazz ->
                    // getAnnotation is presumably Spring's AnnotationUtils.getAnnotation, imported statically
                    getAnnotation(clazz, TypeAlias::class.java)!!.value
                }
            ),
            SimpleTypeInformationMapper(),
        )
    )

Pretty minimalistic, isn’t it?

Here’s what happens there:

- We create an instance of Reflections pointing to the dev.monosoul.spring.data.mongo.issue package, so it will only scan classes in that package.
- We get all classes annotated with @TypeAlias.
- We map each of those classes to the value of its @TypeAlias annotation, i.e. we create a map of type to alias.
- We instantiate DefaultMongoTypeMapper similarly to how we did that with explicit type declaration. The only difference is that we get the mappings automatically via reflection.
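The map-building step can be tried out without Spring or the reflections library. In the sketch below Alias is a hypothetical stand-in for @TypeAlias, and the explicit candidates list replaces the classpath scan that getTypesAnnotatedWith performs in the real mapper; only the associateWith step mirrors the code above.

```kotlin
// Hypothetical stand-in for Spring Data's @TypeAlias.
@Target(AnnotationTarget.CLASS)
@Retention(AnnotationRetention.RUNTIME)
annotation class Alias(val value: String)

@Alias("field_type_impl")
class FieldTypeImpl

@Alias("other_field_type_impl")
class OtherFieldTypeImpl

// Builds the type-to-alias map from the annotation values,
// skipping classes that do not carry the annotation.
fun aliasesOf(candidates: List<Class<*>>): Map<Class<*>, String> =
    candidates
        .filter { it.isAnnotationPresent(Alias::class.java) }
        .associateWith { clazz -> clazz.getAnnotation(Alias::class.java).value }

fun main() {
    val mappings = aliasesOf(
        listOf(FieldTypeImpl::class.java, OtherFieldTypeImpl::class.java, String::class.java)
    )
    mappings.forEach { (type, alias) -> println("${type.simpleName} -> $alias") }
}
```

Because the map is derived from the annotations, a newly added annotated class shows up in it without any further configuration, which is the property the reflective mapper relies on.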

We will also have to change MongoDbConfig like this:

    override fun mappingMongoConverter(
        databaseFactory: MongoDatabaseFactory,
        customConversions: MongoCustomConversions,
        mappingContext: MongoMappingContext,
    ) = super.mappingMongoConverter(databaseFactory, customConversions, mappingContext).apply {
        setTypeMapper(ReflectiveMongoTypeMapper())
    }

Here we just make Spring use the new type mapper, similar to the explicit type declaration.

The full example is available on GitHub.

Let’s run the tests again.

Awesome! Everything works, and if we add a new polymorphic field we won’t have to change any configuration. Moreover, we only use reflection during context startup; it is not used afterwards at runtime, so it shouldn’t affect performance.

Summary

So, what can we take away from all that?

- When writing integration tests for repositories it’s better to avoid saving objects using the same repository you’re testing, even if you’re testing a different method. E.g. if you test the save method, try not to use the same repository’s get method to validate that the object got saved properly. And if you test the get method, it’s better not to use the save method to persist the objects first.
- It might be a good idea not to share the Spring context between tests for different repository methods, because even if you follow the previous advice, it doesn’t guarantee other tests won’t affect execution. I.e. make use of @DirtiesContext.
- There are multiple ways to solve the issue; pick the one that suits your needs best. In my opinion the last solution is the most error-proof one.

Happy hacking!

4 thoughts on “Polymorphic fields with MongoDB and Spring Data”

Thank you for sharing this work! I would love for this to be part of the Spring framework, because it seems like such a basic thing. Would you mind suggesting this in a pull request for spring-data-mongodb?

Hey Sebastian, glad it helped you! Atm I don’t have enough free time to contribute it there, also I don’t think they’d accept it for various reasons.

I gave up reading your post after I realized all the code examples were in Kotlin. It was especially jarring to try to read because nowhere did you say you were using Kotlin, but you did mention Spring, which made me assume Java. Please just use Java, especially when writing about Spring.

Hey Bob, thanks for the feedback and sorry it got you confused. I’ve been using Kotlin exclusively for the past 4 years both at work and in my personal projects, so it’ll be hard for me to use Java examples here. But I’ll make sure to explicitly mention the language.